2. Testing

This is the second guide in the 4-part Getting Started with Scroll series.

Goals

Once your knowledge base is ready, test your expert.

Testing serves three purposes:

Quality assurance - Put yourself in your audience’s shoes and run a few queries to confirm the answers meet your bar.

Knowledge optimization - A few sample queries will reveal knowledge gaps and low-quality sources, allowing you to update the knowledge base accordingly.

Expert guidelines tuning - Scroll lets you steer the expert toward specific behaviors. Test and iterate until it feels exactly right.

Ship only after you are confident in your expert's results.

How to Test

1. Running Queries

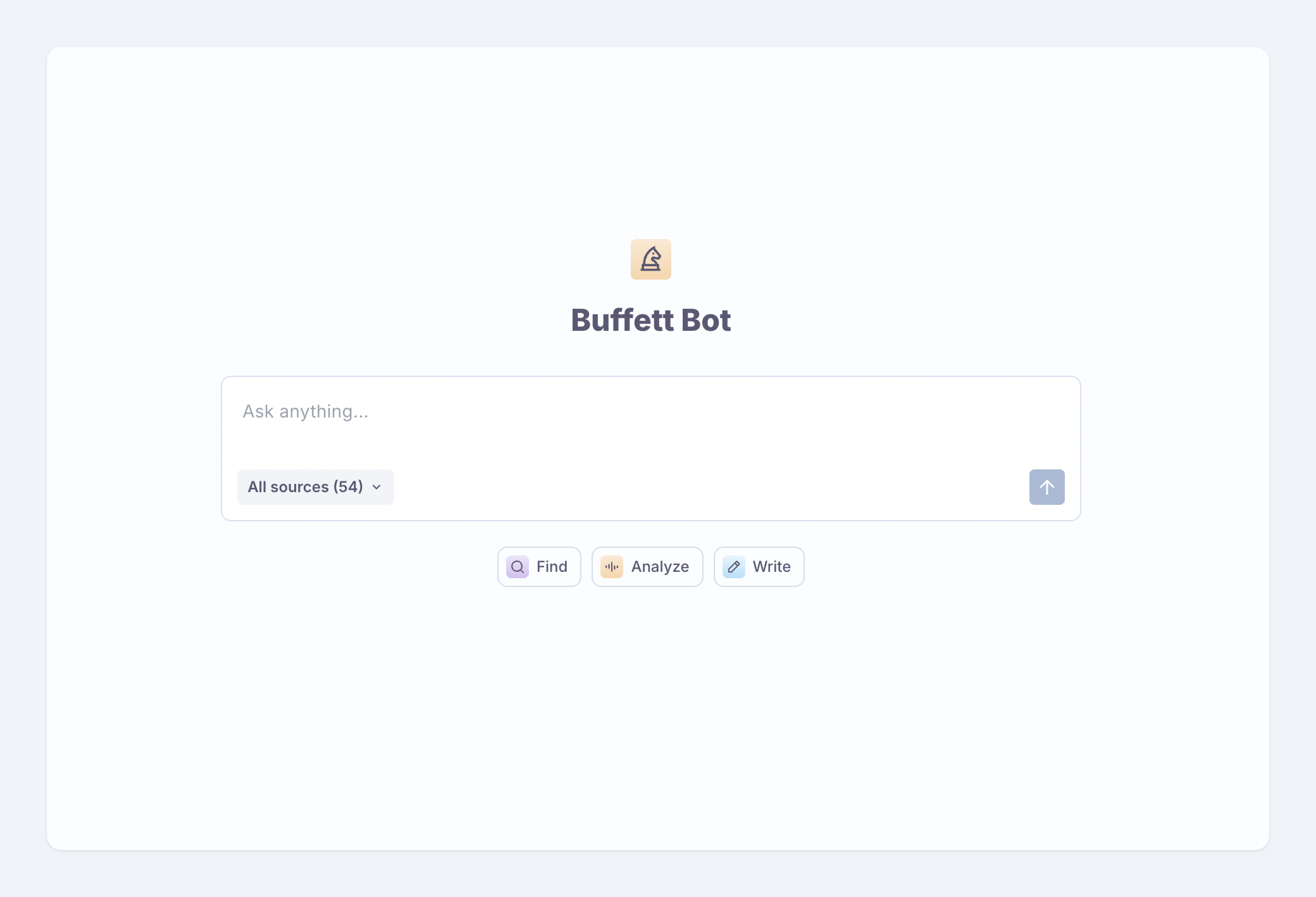

Go to the chat playground and type a question in the field that says "Ask anything...".

Think about what your audience would actually ask. Don’t over-engineer your prompts; your users won’t optimize theirs either.

2. Reviewing Sources

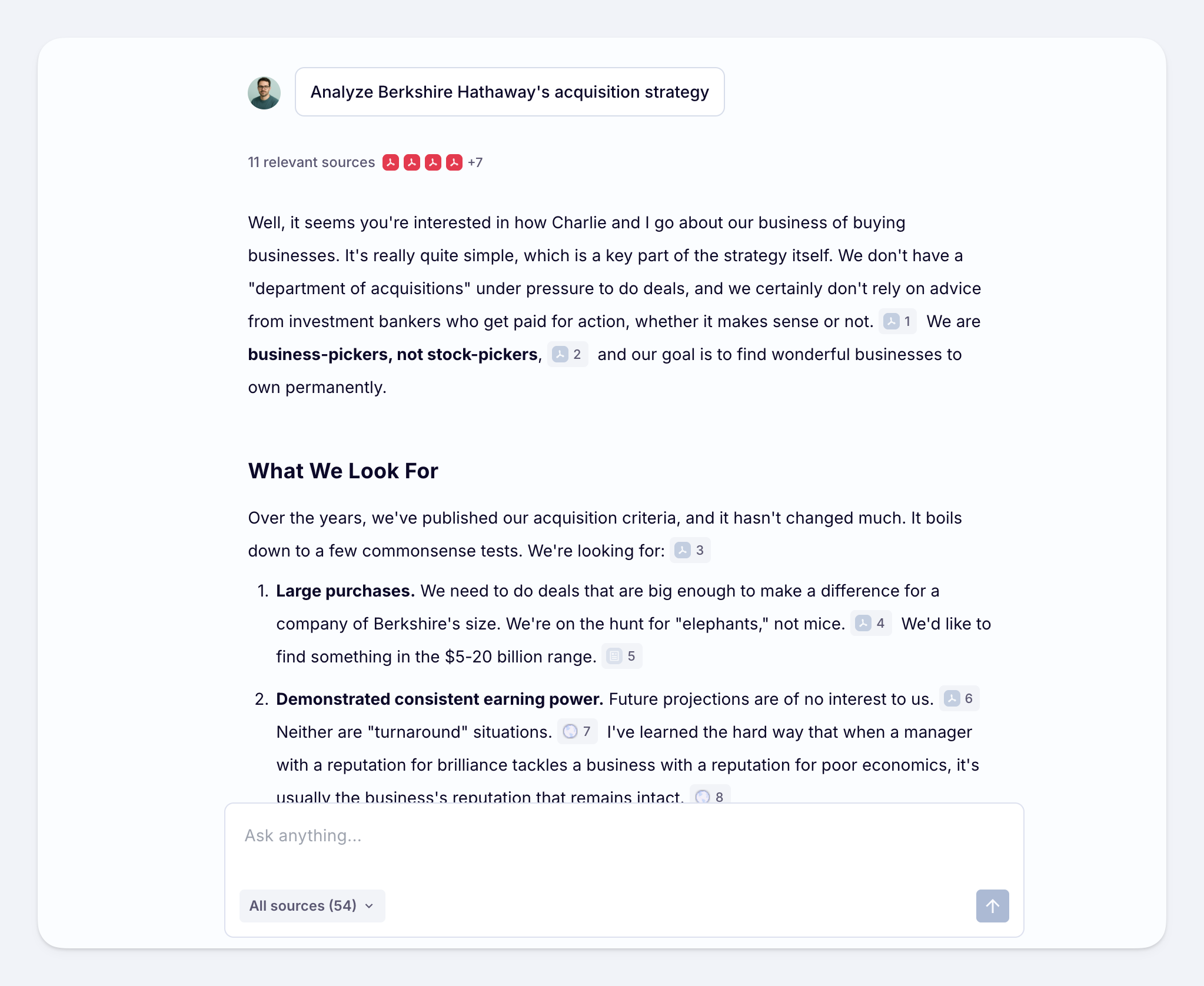

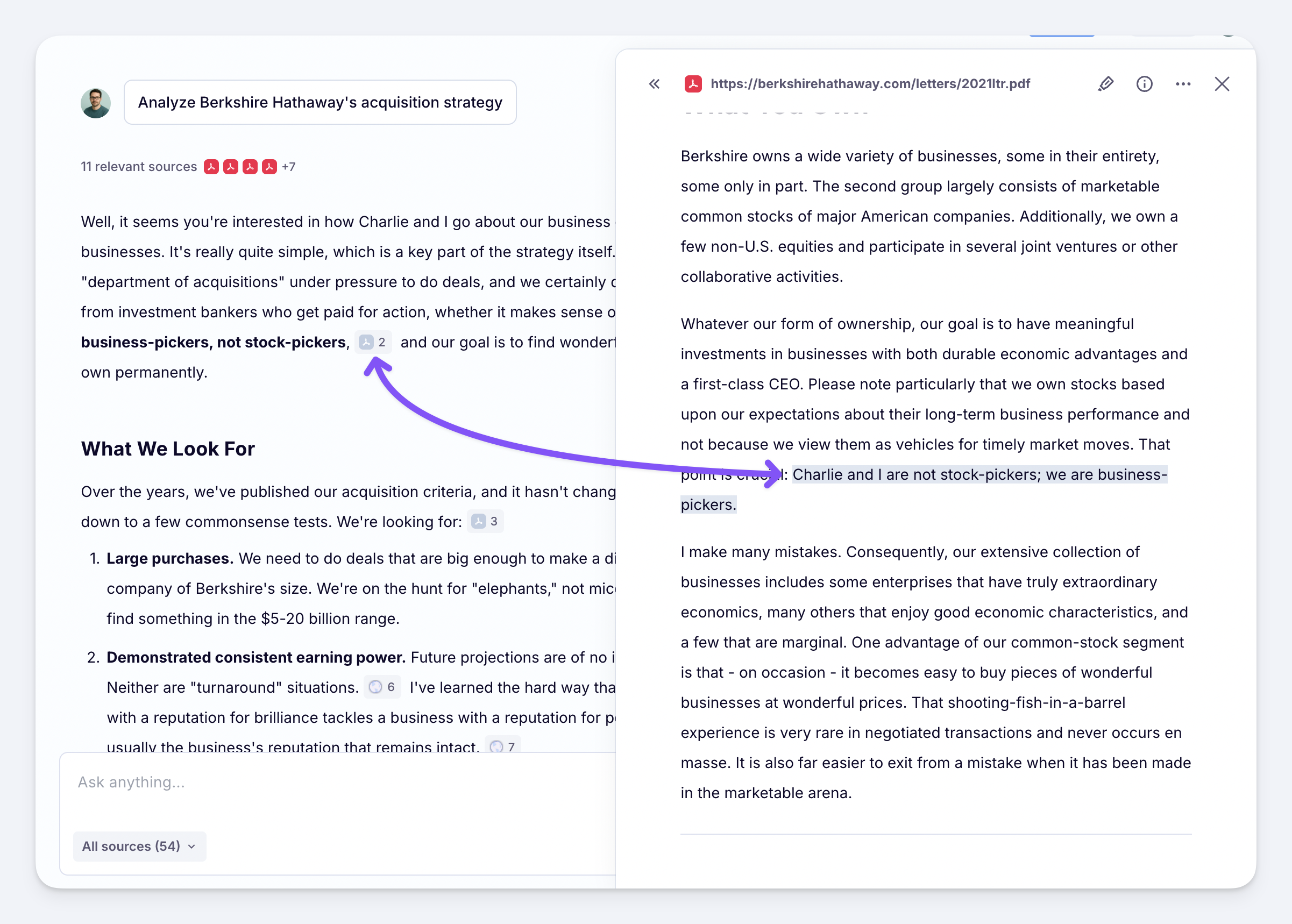

Click any citation marker to open the exact source passage used for that answer:

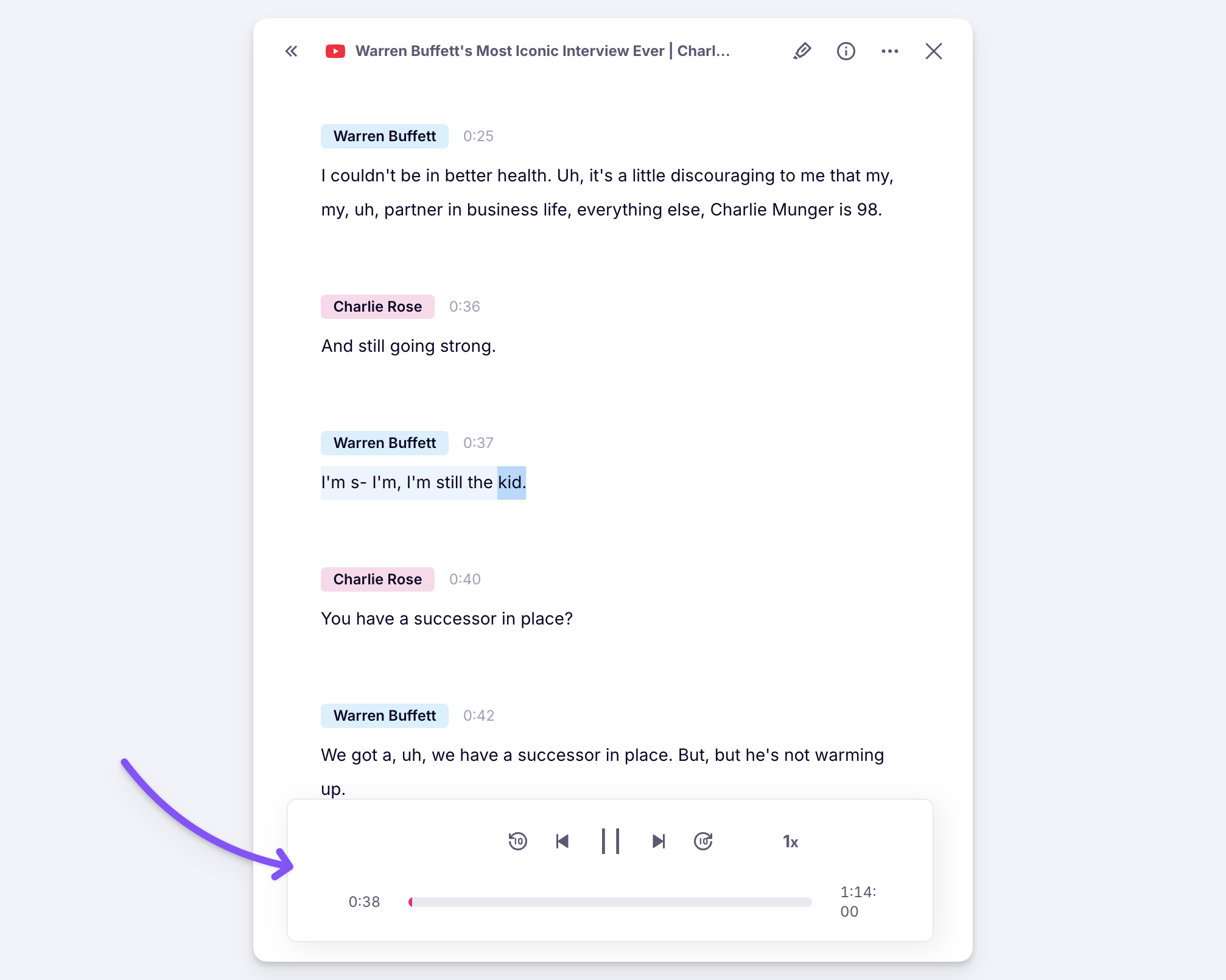

For audio sources, the built-in player lets you listen to the original audio.

3. Fixing Problems

As you read through your expert's answers, evaluate:

🎯 Accuracy: Are any claims factually wrong or misleading?

📚 Completeness: Does the answer omit important information?

🔍 Relevance: Does the answer drift off topic?

⚡ Decisiveness: Is the answer too vague or hedging unnecessarily?

✍️ Style: Do the tone and structure suit the intended audience and context?

If you spot accuracy or completeness problems, improve your knowledge base: remove bad sources or add stronger ones.

If you do not have an existing source to add, create one. A useful hack for creating knowledge is to record yourself or another person talking about the subject for ten minutes. No prep is needed, and you will be amazed how much ground you cover.

Issues related to relevance, decisiveness and style are behavioral problems. You can steer behavior by editing the expert guidelines. See Customization for details.
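If you keep a simple log while testing, the routing rule in this section can be written down as a small lookup: accuracy and completeness issues go to the knowledge base, while relevance, decisiveness and style issues go to the expert guidelines. The sketch below is a hypothetical Python helper for organizing your own notes. It is not part of Scroll, and the `Finding` record, the `FIX_TARGET` mapping and the sample queries are illustrative assumptions.

```python
from dataclasses import dataclass

# Hypothetical mapping from review criterion to where the fix belongs.
# Accuracy/completeness -> knowledge base; relevance/decisiveness/style -> guidelines.
FIX_TARGET = {
    "accuracy": "knowledge base",
    "completeness": "knowledge base",
    "relevance": "expert guidelines",
    "decisiveness": "expert guidelines",
    "style": "expert guidelines",
}


@dataclass
class Finding:
    """One problem noticed while reviewing a test answer (illustrative only)."""
    query: str
    criterion: str  # expected to be one of the keys in FIX_TARGET
    note: str


def triage(findings):
    """Group findings by the place where they should be fixed."""
    plan = {"knowledge base": [], "expert guidelines": []}
    for finding in findings:
        target = FIX_TARGET.get(finding.criterion.lower())
        if target is None:
            raise ValueError(f"unknown criterion: {finding.criterion!r}")
        plan[target].append(finding)
    return plan


if __name__ == "__main__":
    # Made-up example log from a testing session.
    log = [
        Finding("What is our refund window?", "accuracy", "Cites an outdated policy"),
        Finding("How do I reset my password?", "style", "Too formal for end users"),
        Finding("Which plans include SSO?", "completeness", "Omits one plan"),
    ]
    for target, items in triage(log).items():
        print(f"{target}: {len(items)} issue(s)")
        for f in items:
            print(f"  - [{f.criterion}] {f.query} -> {f.note}")
```

Keeping the two buckets separate makes it easy to batch your work: one pass over sources, then one pass over the guidelines, followed by a fresh round of test queries.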

🤝 AI Experts and Trust

Nothing is more critical to the success of your AI expert than user trust.

It helps to think about AI experts the same way you think about human experts. Consider a neurosurgeon, a car mechanic, or an IT consultant: we seek their advice only when we trust that they know what they are talking about.

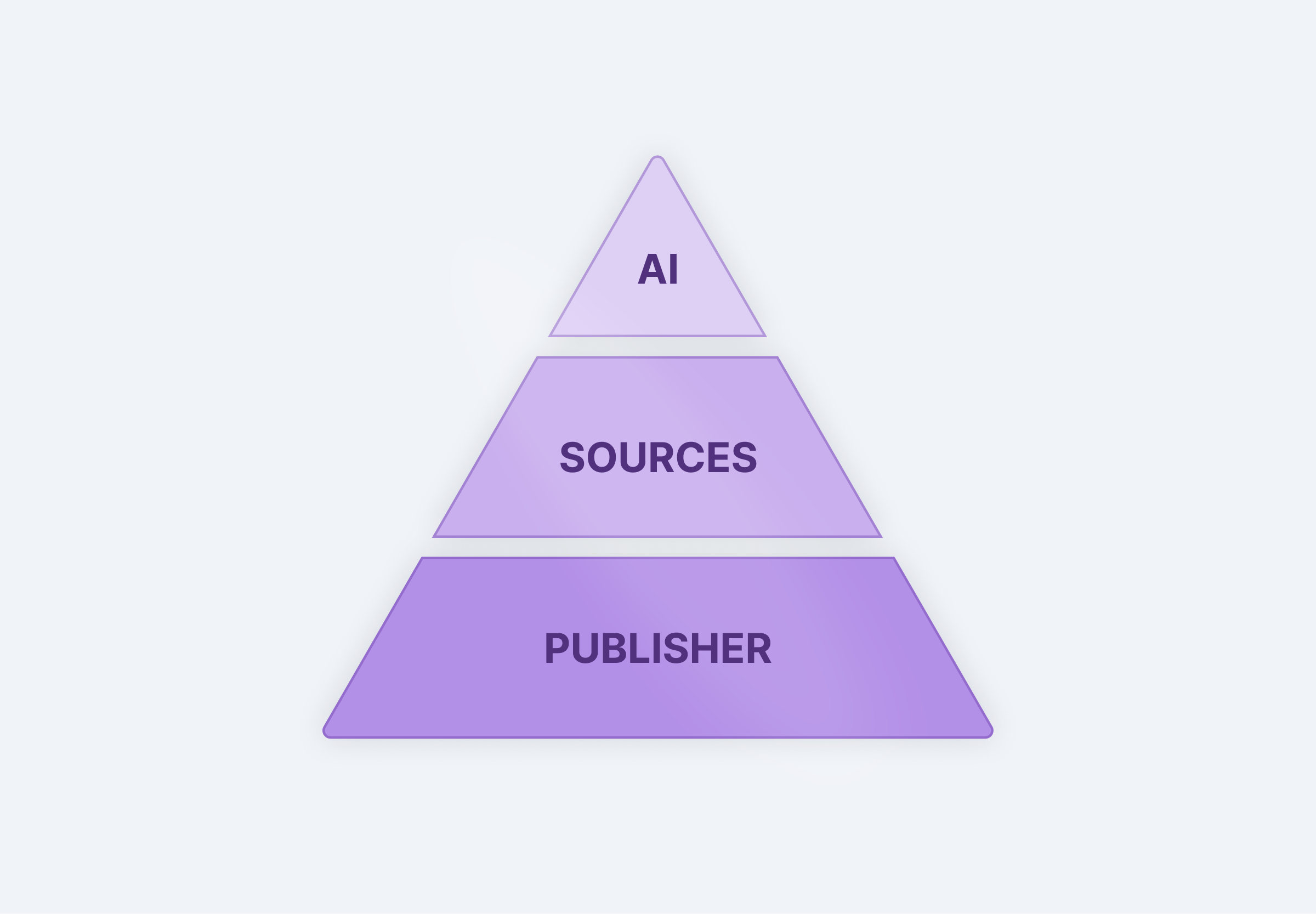

For human experts, trust comes from formal certifications and recommendations. For AI experts, it follows a different hierarchy:

Before anything else, users pay attention to who publishes the expert. Whether you are an individual, a company, or an employee, your brand is the first filter for trust.

Next are the sources you provide. An article from the Financial Times carries more weight than an anonymous Reddit post. A recent presentation from the company CTO is more credible than an old product spec written by an unknown junior employee.

Finally, the quality of the AI engine matters as well. Does it process sources correctly and with attention to detail? Does it understand the underlying knowledge? Does it ground every answer in evidence from the sources?

We share this framework to encourage you to prioritize trust as you create, test, and publish experts.

👉 Continue to chapter 3 of the Getting Started guide.